Jan. 15th Update

As of Jan. 15th, 2021, WhatsApp has announced that this policy will only take effect on May 15th, 2021.

This was announced on their blog (archived Jan. 15, 2021), and echoed in various media, such as Nick Statt's article (archived Jan. 15, 2021) in The Verge.

All points in the article below are based on factual analysis of the text, without conjecture. So unless the text of the policy changes, the article still holds.

Last Monday (Jan. 4th 2021), WhatsApp announced that their privacy policy would change on Monday, Feb. 8th, 2021 for their app, also named WhatsApp.

Ordinarily, this would not have had any effect, much like a drop of water falling into the ocean. However, users were presented with an interesting conundrum: if they would not consent to a seemingly innocent privacy policy change (that people usually never read anyways), their account would be deleted.

This prompted people like myself and countless others to read what had changed and subsequently recoil in horror.

DISCLAIMER: I am not a lawyer, and am perfectly able to misinterpret laws.

Summary

- The socio-historical angle.

- Defense Condition 1

- The European Conundrum

- Shot! Chaser!

- My own take on the matter

The socio-historical angle.

Welcome to the communications age! Whereas just 50 years ago one had to call an operator to be able to make a transatlantic call (or phreak it), we now live in a world where a great majority of people of all ages possess smart devices and a stable Internet connection (at least in the "Western" world), and regularly use these devices to communicate with one another.

*Record Scratch* freeze frame: "So, you're probably wondering how we got here".

Well, most sources place the emergence of smartphones in the mid to late 2000s, but what really kicked off their widespread use were touchscreens (such as the LG Prada in 2006 or the iPhone in 2007). Parallel to this was the emergence of 3G networking, allowing people to carry the power of the Internet in their pockets (but somehow collectively get stupider).

Fast-forward to 2009, and WhatsApp emerges. The concept of the service was simple: to show "statuses next to individual names of people". Fast-forward to 2013, and WhatsApp is valued at $1.5 Billion. The following year, Facebook acquires WhatsApp (for roughly $19 Billion), making it a part of the Facebook family of companies. Nowadays, WhatsApp has more than 1 billion users and is a cornerstone of modern communication capabilities (text, phone, video), especially relevant during a pandemic (COVID-19) for people to stay in touch.

Defense Condition 1

However, a few days ago, on Jan. 4th 2021, WhatsApp announced changes in their privacy policy (from the last version (Dec. 19th, 2019 | archived Jan. 12th, 2021) to the current version (Jan. 4th, 2021 | archived Jan. 11th, 2021)), as well as a pretty clear-cut message: "Accept, or GTFO".

The change could be summed up in a single modification, which was the removal of the sentence "Respect for your privacy is coded into our DNA."

"Information we collect"

One of the first things that jumped out was that WhatsApp originally asked for a phone number and some basic information, and also required that users "provide us [WhatsApp] with the phone numbers in your mobile address book on a regular basis", without any additional information as to the modalities of such exchanges of information (a pattern that will seem familiar by the end of this post), as seen in Fig. 1.

With the new policy change, WhatsApp seems to increase the ambiguity: it still wants the uploads, but now also speaks of "managing the information in a way that ensures those contacts cannot be identified by us [WhatsApp]", which is ridiculous because a phone number is already enough PII (Personally Identifiable Information) to partially identify someone (see Fig. 2).

But this is only the tip of the iceberg, and also the only place where WhatsApp bothers to ask for one's informed consent (which one gives by blindly pressing "I agree").

When it comes to Automatically Collected Information, we get into muddier waters.

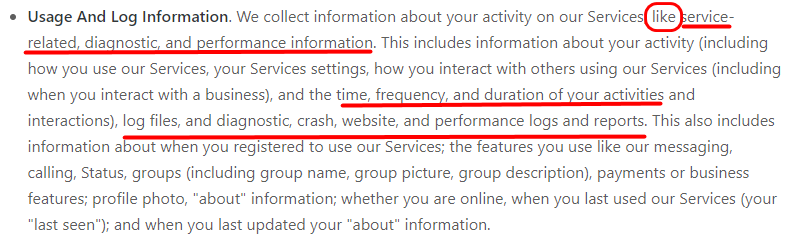

A part of that is the Usage and Log Information section, wherein WhatsApp used to only collect "service-related, diagnostic, and performance information" (see Fig. 3).

In the new iteration, WhatsApp collects information "like service-related, diagnostic, and performance information.", and then proceeds to give some precise examples (such as "the time, frequency, and duration of [one's] activities", or "log files, and diagnostic, crash, website, and performance logs and reports"). The word "like" in this context is deeply disturbing (to me, again: not a lawyer), because it seems to imply that these categories are only examples (and thus a logical subset) of the types of information WhatsApp collects, which, in addition to the log files, can amount to a serious breach of users' privacy. Yet again, we do not know what all of the collected information is used for (see Fig. 4).

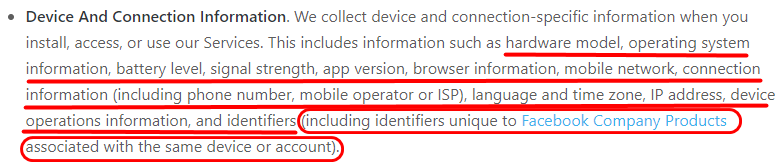

In addition to these elements, WhatsApp has consistently admitted to collecting "device and connection-specific information [...] such as hardware model, operating system information, battery level, signal strength, app version, browser information, mobile network, connection information (including phone number, mobile operator or ISP), language and time zone, IP address, device operations information, and identifiers (including identifiers unique to Facebook Company Products associated with the same device or account)" (see Fig. 5).

The original (without the purple) was already a quite invasive amount of information that was continuously harvested.

The newest addition (in purple) is cause for even more concern, because it effectively means that the data from every app in the Facebook monopoly of applications (WhatsApp, Facebook, Messenger, Instagram, Oculus, etc.) installed on one phone can be tied together, and those apps can account for most of your phone usage (as per the statistical increase of the presence of social media in people's lives reflected in this article by the Centraal Bureau voor de Statistiek, a.k.a. the Dutch National Statistics Bureau).

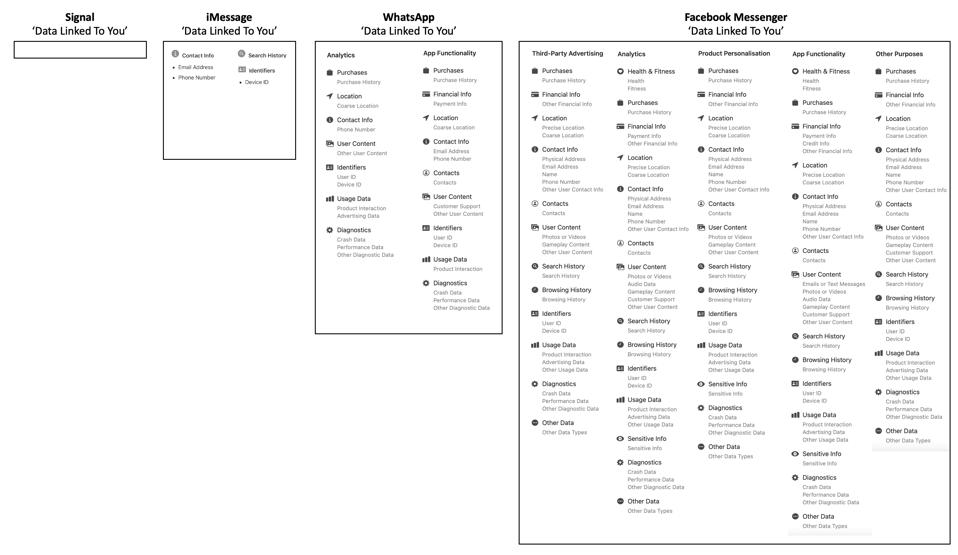

Zak Doffman published an article in Forbes comparing the various information that Facebook and WhatsApp collect about people, summed up in the following graphic.

It could be tempting to dismiss the breadth of PII collected by WhatsApp in the face of the amount of PII collected by Facebook Messenger, but when comparing the data collected with the likes of Signal and iMessage, one can ask oneself how services that do virtually the exact same thing could have such widely varying requirements, and how one can, in the long run, reduce one's digital footprint.

"Third Party Information"

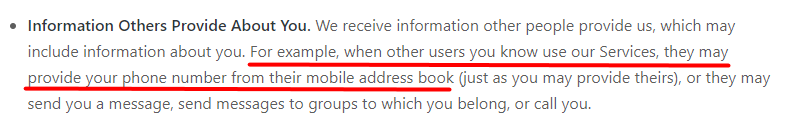

We saw that WhatsApp now regularly acquires users' contacts, usually from one's address book.

Originally, the only thing that one provided was "[one's] phone number from [another's] mobile address book", whether you were registered or not with WhatsApp (see Fig. 7).

With the new update, however, it isn't just someone else's phone number you provide, but "[one's] phone number, name, and other information (like information from [another's] mobile address book)" (see Fig. 8). That last bit is a clear increase in the scale of information collected about other users, and we still don't know why all these data are being collected.

Another point in the new policy relates to liability, as no one knows whether or not they actually have "lawful rights to collect, use, and share" one another's information, or if they could be sued for having someone else's phone number in their address books.

Think about it: we all store varying amounts of information regarding other people in our address books.

Imagine this in an academic or an enterprise context: does having added someone to your contacts 6 years ago make you vulnerable to a lawsuit? Even worse, what happens if the number that was once associated with a friend of yours gets reassigned to someone else? Could this person sue you? Apparently yes.

Example

You meet a representative called Alice E. (fictional character) at a business convention, in relation to their work as a senior threat analyst at a cyber security company named ExampleCyberz (fictional company). You get off on the right foot, and might someday apply at ExampleCyberz for a job. So, you store Alice's last name, first name, company, business number and business email.

Someone else, for example Alice's sibling, has Alice's personal and business phone numbers and email address (to be able to reach them in case of an emergency).

From those 2 contact points it is feasible to determine that both Alices are the same person, and to tie that user's Facebook profile to a specific company.
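To make the reasoning concrete, here is a minimal sketch of how two uploaded address-book entries could be linked through a shared identifier. Everything in it is an assumption made for illustration: the `link_contacts` function, the field names, the phone numbers and the email addresses are all hypothetical, and nothing here claims to reflect how WhatsApp or Facebook actually process contacts.

```python
def link_contacts(entries):
    """Merge address-book entries that share a phone number or email.

    Each entry is a dict with 'identifiers' (a set of phone numbers and
    email addresses) and 'facts' (whatever else the uploader stored).
    These field names are hypothetical, chosen for this sketch only.
    """
    profiles = []
    for entry in entries:
        merged = {
            "identifiers": set(entry["identifiers"]),
            "facts": dict(entry["facts"]),
        }
        remaining = []
        for profile in profiles:
            # Any shared identifier means both entries describe one person.
            if profile["identifiers"] & merged["identifiers"]:
                merged["identifiers"] |= profile["identifiers"]
                merged["facts"] = {**profile["facts"], **merged["facts"]}
            else:
                remaining.append(profile)
        remaining.append(merged)
        profiles = remaining
    return profiles


# Your entry: Alice's business details only (fictional data).
your_entry = {
    "identifiers": {"+1-555-0100", "alice@examplecyberz.example"},
    "facts": {"name": "Alice E.", "company": "ExampleCyberz"},
}
# Her sibling's entry: business and personal details (fictional data).
sibling_entry = {
    "identifiers": {"+1-555-0100", "+1-555-0199", "ali@mail.example"},
    "facts": {"nickname": "Ali"},
}

profiles = link_contacts([your_entry, sibling_entry])
for profile in profiles:
    print(sorted(profile["identifiers"]), profile["facts"])
```

The shared business number is enough to collapse both entries into a single profile that now holds the personal number, the personal email and the employer, none of which Alice handed over herself.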

However, a person is hardly ever known by only 2 others. And that's where we enter the realm of "Big Data" (which is in itself deserving of a few more blog posts).

Defense Condition 1: TL;DR

- WhatsApp used to collect the name, the phone number and users' contacts regularly.

- They still do, but with more ambiguity as to the use cases.

- WhatsApp used to only collect "service-related, diagnostic, and performance information"

- WhatsApp now collects information "like service-related, diagnostic, and performance information.", where the insertion of the word "like" is a striking choice (again: not a lawyer).

- WhatsApp used to collect a staggering amount of hardware data (hardware model, OS, IP address, etc.)

- WhatsApp collects the same data as above but also identifiers used by other Facebook apps on the same device, effectively tethering accounts to a piece of hardware and linking all of the collected data across the services offered by Facebook.

- WhatsApp used to scrape all of the phone numbers in one's mobile address book.

- WhatsApp now scrapes the entire address book and assumes you have the legal authority to share the contents of it.

The European Conundrum

When it comes to European citizens, WhatsApp is correct in saying that certain policies don't apply (aptly choosing to provide an alternate policy (archived Jan. 12th 2021) for citizens of the European Economic Area).

They collect the same amount of information, but seem reassuring as to how this information will be shared with third parties (to remain compliant with the General Data Protection Regulation (GDPR)). An important notion of GDPR that seems to not be entirely understood is that it doesn't stop companies from processing users' data, but rather from sharing it with third parties for them to process it for most business purposes.

Whether inside or outside the EEA, Facebook can process information collected by WhatsApp (as Facebook owns WhatsApp) for its own analytics and advertising scheme (and more).

This could potentially serve as a way to fingerprint users, but it could also be used for darker purposes, such as furnishing 'shadow profiles': profiles of people containing information they themselves haven't necessarily provided, such as information handed over by data brokers, as this article covering Mark Zuckerberg's (CEO of Facebook) testimony before the US Congress shows us.

These 'shadow profiles' are something that Facebook curates about people on and off of the platform, gleefully breaking the requirement for informed consent (or at least an "I agree without having read the terms and conditions" kind of consent) with information on how frequently users interact outside of Facebook. These 'shadow profiles' can then potentially be used to determine preferences and display ads that Facebook was paid to show, or for other more nefarious 'Big Brother'-ly purposes (without actually requiring the person to be logged in or even possess a Facebook account).

Make of the following what you will, but it seems that Facebook has recently been actively setting aside considerable amounts of money specifically for GDPR fines (as seen in this Dec. 2020 article, Facebook has allegedly put aside 302 Million Euros for that purpose).

Shot! Chaser!

Since Monday (Jan. 4th 2021), a great many articles have been sounding the alarm, such as Zak Doffman's article in Forbes.

This has led to a multitude of things, which we'll now get into.

First off, a certain number of people are migrating off of WhatsApp towards Signal and Telegram (more on those platforms here), if the App/Play Store recommendations are anything to go by.

The triggers for this move are mostly privacy concerns, or the wish to maintain contact with those that deplatform, but also, to a lesser degree, certain hoaxes that rehash 3-year-old hoaxes like the one described in this article from 2017.

Signal for example has had a huge boost in popularity after being promoted by public figures on Twitter.

A funny side effect of this gain in popularity for Signal is the rise in the price of unrelated stocks, such as OTC:SIGL (Signal Advance Inc.).

More on the alternative platforms

Signal is an app controlled and developed by the Signal Foundation, a 501(c)(3) non-profit, which also develops the open-source Signal Protocol that WhatsApp bases its encryption on. The Signal Protocol is constantly being vetted and is among the most secure end-to-end encryption protocols currently in existence. As for the concerns of privacy enthusiasts, Signal only requires one thing from its users: their phone number.

Telegram on the other hand is owned by "Telegram Messenger Inc." and is only partially open-source: the clients are open-source but the server software is not. This has led to a bit of criticism, as has its custom encryption protocol (MTProto), because the security of the system as a whole cannot be independently verified. Additionally, Telegram's chats are not end-to-end encrypted by default, although they are still encrypted in transit. People should use the "Secret Chat" feature to have a fully end-to-end encrypted chat.

My own take on the matter

I have personally been wary of Facebook for a while now, having closed my accounts within the ecosystem in 2014. I have so far maintained a WhatsApp account but am planning on deplatforming before Feb. 8th 2021.

I also have Signal and Telegram accounts that I use to stay in touch with friends, as well as a presence within the Keybase, Riot and Wire platforms.

My own recommendation would be to start migrating work, recreational and class groups away from the platform, so as to be as secure as possible and as inclusive as possible of those who want their privacy to be respected.

I realize that it may be or seem complicated to convince friends, colleagues, children, parents and grandparents to migrate to Signal or Telegram, when they've spent the better part of the last 4-5 years using WhatsApp, and it would be especially complicated to help people migrate, since we are still living in a pandemic.

Thankfully, the Internet has its share of kind strangers, and people such as Forbes' Senior Cybersecurity Contributor Kate O'Flaherty have published guides on how to use these platforms and migrate from one to another, such as this guide.