Once more, we find ourselves at the end of a chapter of a long and ongoing adventure!

If you missed it, I am one of the managers for a community called Digital Overdose (which you can find at this link).

I do a lot of things at D.O.: managing day-to-day events, maintaining the website, organizing and running their Capture The Flag events, and finally also running the Digital Overdose Conference (dun-dun-dunnnnn... pause for dramatic effect).

Well, this year, I organized the second ever Digital Overdose Conference, and I wanted to give you all a bit of a behind-the-scenes look at how one organizes a fully virtual event like that conference!

More specifically, I'll be telling you how I do it. Some of the things I do are just for flair, and honestly a bit over the top.

Organizing the event

The organization of the event usually starts a few months ahead of time, mostly around mid-December. That's when we try to set a date for the conference, as well as plan out the main "phases" that it will go through.

Generally, there are 4 phases to plan out:

- Preparation

- Talent Acquisition

- Talent Mentoring | Asset Preparation

- Conference

The Preparation phase is where we lock dates, create the Call For Presentations (CFP) form, find willing mentors for the Talent Mentoring phase, find people available to review the individual responses to the CFP, and finally try to settle on the visual style of the conference.

The Talent Acquisition phase is where people submit to the CFP, and where reviewers take the time to review and grade the submissions. Where we differ from most other conferences is that we don't ask for a detailed submission. All we need is a title, a short description and a motivation for giving the talk, which helps us root out corporate / vendor / snake oil talks. I usually don't involve myself with the CFP review process, as it'd be unfair to the participants.

The Talent Mentoring phase only concerns the speakers and mentors, and runs in parallel with the Asset Preparation phase. It mainly involves speakers working on their talks, with mentors supporting them and being available for questions and the like.

The Asset Preparation phase is where people like myself generate the stream assets, such as images, videos, layouts, etc. as well as source the relevant music assets. I'll be going a bit more into that in the next section.

Then comes the conference itself, which is a 3-4 day period where we do setup and tests, run the conference, and then tear everything down, including uploading all the conference outputs to YouTube.

So that's a lot of phases with announcements, communication and the like to plan in advance.

Preparing assets

There are several assets at play when it comes to the conference (see below for some sneak peeks)! They're usually a pain to create, and involve a variety of tools, such as GIMP, Blender VSE, and FFMPEG.

There are the promotional assets, such as the poster for the conference, and the announcement posters!

The conference cover art.

Then there are the stream assets, such as the various layouts used in OBS.

Live shot of the stream assets in use, during a talk.

Live shot of the stream assets in use, during a break.

Also of note are the musical assets, which we source beforehand and need to integrate into the stream assets in order to make the conference run smoothly, and to keep the attention of the attendees.

10 second GIF of one of the audio assets.

How did I make that, you ask? (Or if you didn't, that's a shame, you're still finding out.)

Well, the initial part is an FFMPEG script (below) that takes the MP3 and generates the waveform.
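For the curious, here is a rough sketch of what that first step could look like. It is not the exact script used for the conference: it assumes FFMPEG's `showwaves` filter, and the function name, resolution, frame rate and file names are all made up for illustration.

```python
import shlex
from pathlib import Path


def waveform_command(mp3_path, width=1280, height=720, fps=25):
    """Build an ffmpeg command that renders an MP3's waveform into a .nut file.

    Hypothetical helper: the size, frame rate and naming scheme are assumptions.
    """
    mp3 = Path(mp3_path)
    nut = mp3.with_suffix(".nut")  # intermediate file named after the track
    # showwaves turns the audio samples into a video stream of the waveform
    filter_graph = (
        f"[0:a]showwaves=s={width}x{height}:mode=line:rate={fps},"
        "format=yuv420p[v]"
    )
    return [
        "ffmpeg", "-y",
        "-i", str(mp3),
        "-filter_complex", filter_graph,
        "-map", "[v]",       # the generated waveform video
        "-map", "0:a",       # keep the original audio next to it
        "-c:v", "rawvideo",  # uncompressed frames in the NUT container
        "-c:a", "copy",
        str(nut),
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so it can be inspected first.
    print(shlex.join(waveform_command("track01.mp3")))
```

Passing the returned list to `subprocess.run(..., check=True)` would actually run it, provided `ffmpeg` is on the PATH.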

The second part is transforming the .nut file to a .mp4 file, as follows:
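Again as a sketch rather than the exact command used: the conversion could be a plain re-encode with FFMPEG. The H.264 encoder, the CRF value, and the function and file names below are assumptions on my part.

```python
from pathlib import Path


def nut_to_mp4_command(nut_path, crf=18):
    """Build an ffmpeg command that re-encodes a .nut file into an .mp4.

    Hypothetical helper: the codec choices and quality setting are assumptions.
    """
    nut = Path(nut_path)
    mp4 = nut.with_suffix(".mp4")  # same name, new container
    return [
        "ffmpeg", "-y",
        "-i", str(nut),
        "-c:v", "libx264",      # H.264 video, widely playable
        "-crf", str(crf),       # lower means higher quality and bigger files
        "-pix_fmt", "yuv420p",  # pixel format most players expect
        "-c:a", "aac",          # only has an effect if the input carries audio
        str(mp4),
    ]
```

As with any FFMPEG invocation, the list can be handed to `subprocess.run(..., check=True)` once the settings look right.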

The third part is creating a Blender VSE system that will render it. Now, of course, with 25 tracks to process, we chose to automate everything. And it turns out Blender does accept Python for most of its context-specific work!
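To give an idea of the batch side of this, here is a minimal sketch that queues one headless Blender run per clip. The project file `waveform.blend` and the in-Blender script `render_track.py` are hypothetical names, and what that script does with the clip path is left out; only the `--background`, `--python` and `--` command-line options are Blender's own.

```python
from pathlib import Path


def blender_jobs(track_dir, project="waveform.blend", script="render_track.py"):
    """Return one Blender command line per .mp4 clip found in track_dir.

    Hypothetical helper: the project and script names are placeholders.
    """
    jobs = []
    for clip in sorted(Path(track_dir).glob("*.mp4")):
        jobs.append([
            "blender",
            "--background", project,  # open the project without the UI
            "--python", script,       # script executed inside Blender
            "--",                     # Blender ignores what follows; the script reads it from sys.argv
            str(clip),
        ])
    return jobs
```

Each job can then be run in turn with `subprocess.run(job, check=True)`, which is where the time saving over clicking through 25 tracks by hand comes from.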

It may take a short while to set up, but this is actual time-saving automation. Not like this tweet echoing a programming meme would have you think:

"The Day Of"

The Day Of is always a really chaotic time. Why? Because of Murphy's Law ("Anything that can go wrong will go wrong"). This is why we have mitigations in place, and also try to keep things from going wrong in the first place!

All went well this time around, mainly due to the entire team working well together, and making sure the community was looked after.

But what did it look like on the ground?

Well, there are several issues when organizing virtual conferences. You need someone to do A/V. You need someone with a good internet connection. You need hosts. You need speakers.

If you look at the picture below, you'll realize that some people need to handle more than one role at once.

A picture of my desk during the conference.

Let me explain: what you see there is the entire A/V setup for the conference.

The upper left monitor is the OBS monitor. It handles streaming the content coming in from the lower left laptop, which hosts a Zoom session.

The upper right monitor is the Oversight monitor. That's where I look at comments, discussions, etc. whilst my own laptop (lower-center) joins the Zoom session.

The closed laptop to the right is the post-processing laptop. Any and all conference streams that we've recorded get post-processed as fast as possible, in order to be able to upload them by the time the conference finishes.

In the frame you'll also see a pair of comfortable headphones, an "OK" microphone, and my Stream Deck. These make running the conference a slightly smoother experience. For reference, last year I used my phone running the OBS app instead of the Stream Deck, which was a pain to deal with. Same with the headphones: last year's pair was less comfortable than the one used this year.

Now, another question arises: Why wouldn't I get someone else to do A/V?

The answer to that is quite simple: it would imply either bringing someone to where I host the conference, or finding someone remote who is available enough, has sufficient bandwidth, and has a keen attention to detail. They would then have to send me the raw footage for post-processing. In my opinion, it's avoidable overhead, even though that means I have a bit more work to do.

The aftermath

So, what happens after? Well, the post-processing is finished, and the videos are all promptly uploaded to the community's YouTube channel.

Beyond that, the logistics for conference swag need to be put into place, in order to source stickers, t-shirts, and the like for our speakers.

Another thing we like to look at is the metrics. This isn't to see which talks performed better than others, but rather to see which timeslots were globally more attractive to our audience.

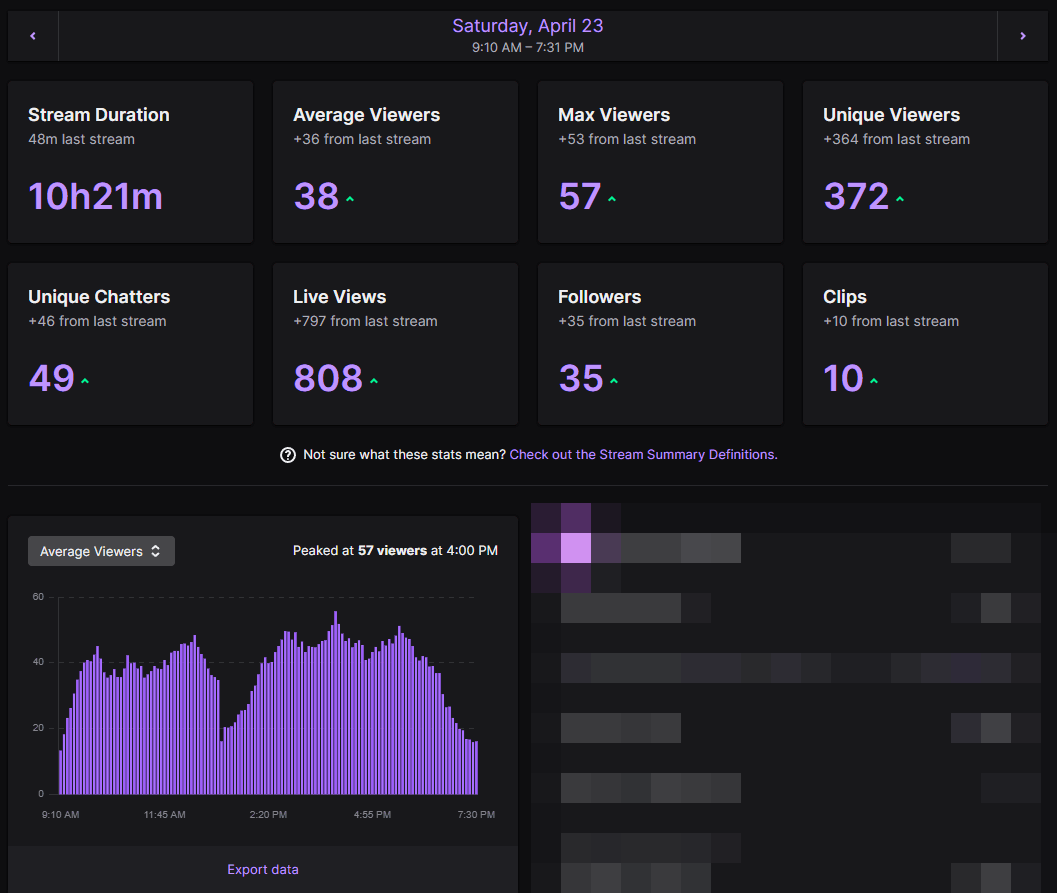

Twitch stream statistics for the first day of the conference.

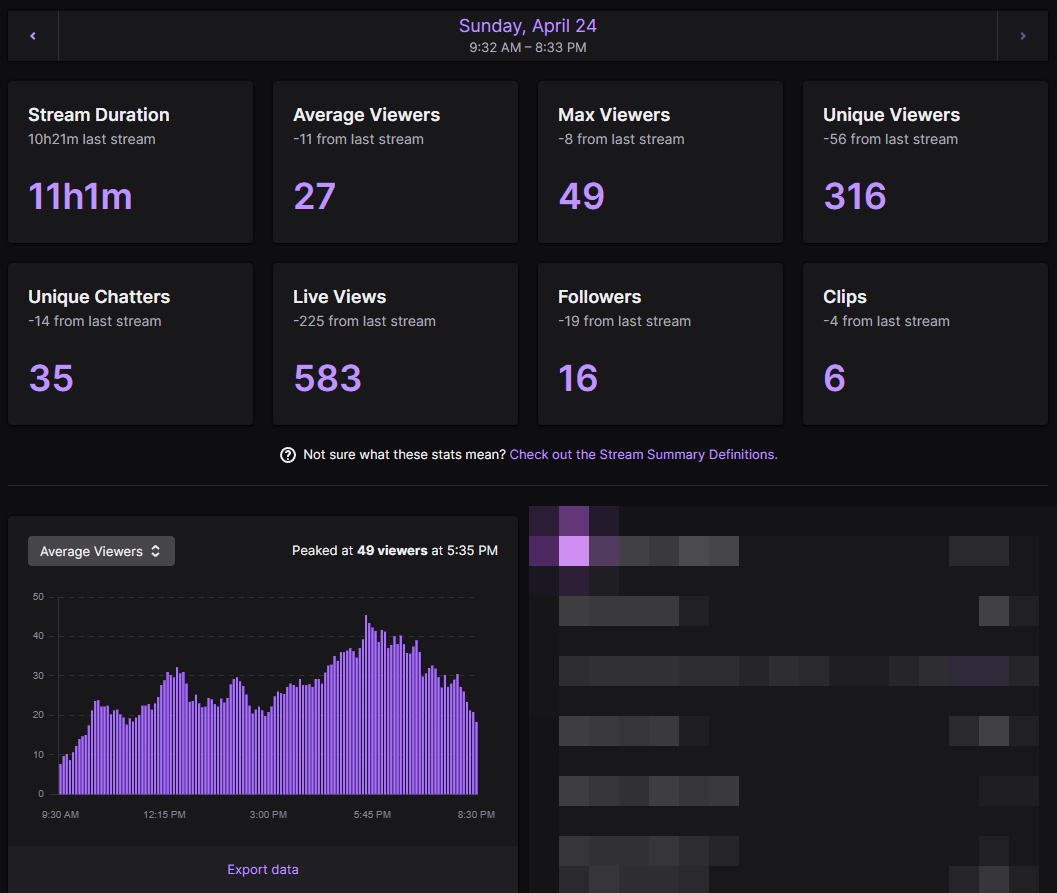

Twitch stream statistics for the second day of the conference.

What can we find out? Well, for starters, and unsurprisingly, Sunday is slightly less favoured by viewers.

Lunch time coincides with a dip in attendance, which makes sense as the only thing playing was music.

People are actively chatting in both streams, so it isn't just background engagement we are getting, which is very interesting.

Finally, we can observe that the streams go on for quite a while, approximately 10 to 11 hours, which is a very long time.

So yeah, that was Digital Overdose Conference 2!